a holographic video image of the same geometry. An interacting

Wendy Plesniak & Ravikanth Pappu

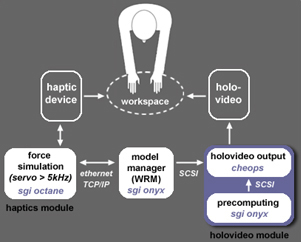

Lathe has three subsystems: the haptics module, the holovideo module, and the model manager. The haptics module runs our force simulation and drives the force-feedback device. The holovideo module precomputes holograms, and drives rapid local updates on the holovideo display. The model manager keeps the visual and haptic representations in sync.

The force simulation runs at an average servo rate of 5 kHz, and the holovideo update rate is 10 fps. The system operates with 1 s of lag between haptic and hologram updates. Thus, typically, as a person lathes, the surface is immediately felt to change and seen to change a second later.
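To make the relationship between these rates concrete, the short sketch below works out the timing budget they imply. The three constants come from the figures quoted above; the function name and dictionary keys are illustrative only.

```python
# Figures quoted in the text.
SERVO_RATE_HZ = 5_000    # average haptic servo rate
HOLO_UPDATE_FPS = 10     # holovideo update rate
LAG_S = 1.0              # lag between haptic and hologram updates

def timing_budget(servo_hz, holo_fps, lag_s):
    """Derive per-tick and per-frame timing from the quoted rates."""
    return {
        "servo_period_ms": 1000.0 / servo_hz,
        "frame_period_ms": 1000.0 / holo_fps,
        "servo_ticks_per_frame": servo_hz / holo_fps,
        "servo_ticks_during_lag": servo_hz * lag_s,
        "frames_during_lag": holo_fps * lag_s,
    }

budget = timing_budget(SERVO_RATE_HZ, HOLO_UPDATE_FPS, LAG_S)
for key, value in budget.items():
    print(f"{key}: {value:g}")
```

In other words, the haptic loop runs about 500 times for every hologram update, and roughly 5,000 servo ticks elapse before a carved change becomes visible.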

Lathe achieves near-real-time holovideo updates by using a set of precomputed holograms of cylindrical stocks having different radii. The final image is then assembled from appropriate regions of this precomputed hologram set, and is changed only in regions where the model has been affected by a person's carving. By making line-level changes, we avoid recomputing the entire 36 MB holographic fringe pattern at each update.
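The line-level update scheme described above can be sketched roughly as follows. This is a toy illustration, not Lathe's actual data layout: the sizes, the `precompute_cylinder` placeholder, the `HoloFrame` class, and the assumption that each hologram line corresponds to one radius along the stock's axis are all hypothetical.

```python
# Hypothetical, tiny stand-ins for the real display (assumed values).
NUM_LINES = 8            # hologram lines in the displayed image
SAMPLES_PER_LINE = 16    # fringe samples per line
RADII = [1, 2, 3, 4]     # radii of the precomputed cylindrical stocks

def precompute_cylinder(radius):
    """Placeholder for real fringe computation: one bytes object per line."""
    return [bytes([(radius * 31 + line) % 256]) * SAMPLES_PER_LINE
            for line in range(NUM_LINES)]

# One precomputed hologram per stock radius, built once up front.
PRECOMPUTED = {r: precompute_cylinder(r) for r in RADII}

def nearest_radius(r):
    """Quantize a model radius to the closest precomputed stock radius."""
    return min(RADII, key=lambda candidate: abs(candidate - r))

class HoloFrame:
    def __init__(self, initial_radius):
        self.line_radius = [initial_radius] * NUM_LINES
        self.lines = list(PRECOMPUTED[initial_radius])
        self.bytes_written = 0

    def update(self, new_profile):
        """Swap in precomputed lines only where the quantized radius changed."""
        changed = []
        for i, r in enumerate(new_profile):
            q = nearest_radius(r)
            if q != self.line_radius[i]:
                self.lines[i] = PRECOMPUTED[q][i]
                self.line_radius[i] = q
                self.bytes_written += SAMPLES_PER_LINE
                changed.append(i)
        return changed

frame = HoloFrame(initial_radius=4)
profile = [4, 4, 3.1, 2.2, 3.0, 4, 4, 4]   # carved radius per line
changed = frame.update(profile)
print(changed, frame.bytes_written, NUM_LINES * SAMPLES_PER_LINE)
```

Only the lines whose radius changed are rewritten, so the cost of an update scales with the carved region rather than with the full fringe pattern.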

Using such specific precomputed elements permits only a limited set of images to be assembled. Our third holo-haptic experiment, Poke, addresses this problem by using more generalized precomputed fringes to assemble a more arbitrary final image.

The force model is given by a 1D NURBS curve which sweeps out a surface of revolution. Pressing into the model with the Phantom stylus causes a force to be displayed back to the hand; pressing with enough force displaces the model's control points inward, so that the stock deforms uniformly around its circumference.
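A minimal sketch of this kind of force model is shown below. It is not the authors' implementation: a piecewise-linear radius profile stands in for the NURBS curve, the force is a simple spring on penetration depth directed radially outward, and every constant and name (`STIFFNESS`, `CARVE_THRESHOLD`, `CARVE_STEP`, `MIN_RADIUS`, `Stock`) is a hypothetical choice made for illustration.

```python
import math

# All constants are assumed values, not taken from the source.
STIFFNESS = 0.8         # N per mm of penetration
CARVE_THRESHOLD = 2.0   # N; above this force the surface yields
CARVE_STEP = 0.5        # mm a control point moves inward per servo tick
MIN_RADIUS = 5.0        # mm; the stock cannot be carved thinner than this

class Stock:
    """Surface of revolution about the y axis, defined by control radii."""

    def __init__(self, radii, height):
        self.radii = list(radii)   # control radii, evenly spaced along y
        self.height = height

    def _locate(self, y):
        # Map y to a segment index and a fractional position within it.
        t = min(max(y / self.height, 0.0), 1.0) * (len(self.radii) - 1)
        i = min(int(t), len(self.radii) - 2)
        return i, t - i

    def radius_at(self, y):
        # Linear interpolation stands in for NURBS evaluation here.
        i, f = self._locate(y)
        return (1 - f) * self.radii[i] + f * self.radii[i + 1]

    def servo_step(self, x, y, z):
        """Return the (fx, fy, fz) force for a stylus tip at (x, y, z)."""
        d = math.hypot(x, z)                 # distance from the rotation axis
        depth = self.radius_at(y) - d        # penetration into the surface
        if depth <= 0 or d == 0:
            return (0.0, 0.0, 0.0)
        magnitude = STIFFNESS * depth
        if magnitude > CARVE_THRESHOLD:
            # Push the nearest control radius inward; because the model
            # depends only on y, the change applies all around the stock.
            i, f = self._locate(y)
            j = i if f < 0.5 else i + 1
            self.radii[j] = max(MIN_RADIUS, self.radii[j] - CARVE_STEP)
        return (magnitude * (x / d), 0.0, magnitude * (z / d))

stock = Stock([25.0] * 5, height=100.0)
light = stock.servo_step(24.0, 50.0, 0.0)   # gentle touch: force, no carving
hard = stock.servo_step(0.0, 50.0, 20.0)    # firm press: force and carving
print(light, hard, stock.radii)
```

Because the carved radius is stored once per axial position, a press at any angle around the stock changes the whole ring at that height, which mirrors the uniform deformation described above.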

Finally, the completed model data can be dispatched to a 3D printer to produce a physical hardcopy of the carved design. The model shown to the right was printed on a Stratasys 3D printer. The entire process serves as one example of a future design and prototyping pipeline.
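As an illustration of what handing a carved model to a printer might involve, the sketch below tessellates a surface of revolution and writes it as ASCII STL. The source does not say which file format or tool chain was used, so the STL choice, the `revolve_to_stl` function, and the `(y, radius)` profile representation are all assumptions. End caps are omitted for brevity, so the mesh is not watertight as written.

```python
import math

def revolve_to_stl(profile, segments=24, name="lathe_model"):
    """Build ASCII STL text for the side wall of a surface of revolution.

    profile: list of (y, radius) pairs ordered along the rotation axis.
    """
    def point(y, r, k):
        a = 2.0 * math.pi * k / segments
        return (r * math.cos(a), y, r * math.sin(a))

    def facet(p, q, s):
        # Facet normal from the cross product of two triangle edges.
        ux, uy, uz = (q[i] - p[i] for i in range(3))
        vx, vy, vz = (s[i] - p[i] for i in range(3))
        n = (uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx)
        length = math.sqrt(sum(c * c for c in n)) or 1.0
        n = tuple(c / length for c in n)
        lines = [f"  facet normal {n[0]:.6e} {n[1]:.6e} {n[2]:.6e}",
                 "    outer loop"]
        lines += [f"      vertex {v[0]:.6e} {v[1]:.6e} {v[2]:.6e}"
                  for v in (p, q, s)]
        lines += ["    endloop", "  endfacet"]
        return lines

    out = [f"solid {name}"]
    for (y0, r0), (y1, r1) in zip(profile, profile[1:]):
        for k in range(segments):
            a, b = point(y0, r0, k), point(y0, r0, k + 1)
            c, d = point(y1, r1, k), point(y1, r1, k + 1)
            # Two triangles per quad, wound so the normals face outward.
            out += facet(a, c, b)
            out += facet(b, c, d)
    out.append(f"endsolid {name}")
    return "\n".join(out) + "\n"

stl_text = revolve_to_stl([(0, 10), (5, 8), (10, 10)], segments=12)
print(stl_text.count("facet normal"), "facets")
```

Each pair of adjacent profile points contributes one ring of quads, split into two triangles each, so the facet count is `2 * segments * (len(profile) - 1)`.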

Selected references:

Ravikanth Pappu and Wendy Plesniak, "Haptic interaction with holographic video images", Proceedings of the IS&T/SPIE's Symposium on Electronic Imaging, Practical Holography XII, January 1998.

Wendy Plesniak and Ravikanth Pappu, "Coincident display using haptics and holographic video", Proceedings of ACM SIGCHI Conference on Human Factors in Computing Systems, April 1998.

Wendy Plesniak and Ravikanth Pappu, "Spatial interaction with haptic holograms", Proceedings of the IEEE International Conference on Multimedia Computing and Systems (ICMCS'99), June 1999.

Wendy Plesniak and Ravikanth Pappu, "Tangible, dynamic holographic images", in Kuo, C.J. (Ed.), 3-D Holographic Imaging. Wiley-Interscience (invited, in press).

Sponsors of this work include Honda R&D Company, NEC, IBM, the Digital Life Consortium at the MIT Media Laboratory, the Office of Naval Research (Grant N0014-96-11200), Interval Research Corporation, and Mitsubishi Electric Research Laboratories.