The Spatial Imaging group at the MIT Media Lab developed new technology and interfaces for holographic and other spatial displays. It was directed by Stephen Benton, who passed away on November 9, 2003. Work on electronic capture and display of 3-D images has continued as part of the Media Lab's Object-Based Media group.

Holographic stereograms are generated from precomputed fringe elements and a set of rendered or optically captured parallax views of a scene.

Some of our work in computing holographic stereograms focuses on computing basis fringes, encoding them, and developing rendering schemes that match reconstruction geometries. A general overview of one way to compute holographic stereograms is given below.

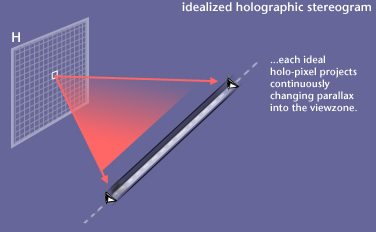

An idealized horizontal-parallax-only (HPO) stereogram, shown above, would reconstruct continuous parallax in the horizontal direction. Each holographic stereogram "pixel" would project to a viewer anywhere in the viewzone the same information that the "live" scene would.
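
To make "the same information that the live scene would" concrete, here is a minimal geometric sketch. It assumes a hypothetical coordinate convention (hologram in the plane z = 0, depth z > 0 toward the viewer) and a hypothetical function name, `ideal_emission_angle`. For one scene point it computes the horizontal direction in which each holo-pixel must emit light so that the light appears to come from that point. Because the required angle changes continuously across the hologram, an ideal HPO stereogram needs a continuum of emission directions.

```python
import math


def ideal_emission_angle(x_h: float, x_p: float, z_p: float) -> float:
    """Horizontal emission angle (radians from the hologram normal) that makes light
    leaving the holo-pixel at x_h appear to come from the scene point (x_p, z_p).

    Assumed convention (hypothetical): the hologram lies in the plane z = 0,
    z_p > 0 is in front of it (toward the viewer), z_p < 0 is behind it, and
    positive angles tilt toward +x.
    """
    if z_p == 0:
        raise ValueError("a point on the hologram plane has no single direction")
    # The ray through (x_h, 0) and (x_p, z_p) satisfies tan(theta) = (x_p - x_h) / z_p,
    # for points both in front of and behind the plane.
    return math.atan((x_p - x_h) / z_p)


# A point 50 units behind the plane: each holo-pixel needs a different direction.
for x_h in (-20.0, 0.0, 20.0):
    print(x_h, math.degrees(ideal_emission_angle(x_h, x_p=0.0, z_p=-50.0)))
```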

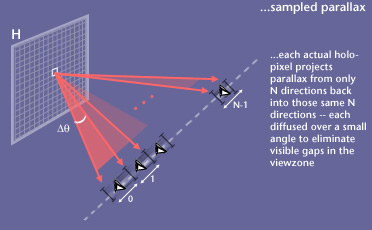

Since we cannot encode infinitely fine parallax, scene parallax is captured from a finite set of directions and then re-projected in those same capture directions. To prevent gaps between parallax views in the viewzone, each view is diffused uniformly in the horizontal direction over a small angular extent.
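
The sampling-plus-diffusion scheme can be sketched as a partition of the viewzone. This assumes, hypothetically, that the viewzone is centred on the hologram normal and split into N equal, contiguous angular spans, one per view. The function names are illustrative only. Because the spans tile the viewzone with no gaps, every viewing angle inside it maps to exactly one parallax view.

```python
import math
from typing import Optional


def view_span(n_views: int, viewzone_width: float) -> float:
    """Angular width (radians) over which each view is uniformly diffused."""
    return viewzone_width / n_views


def view_center(i: int, n_views: int, viewzone_width: float) -> float:
    """Capture (and re-projection) direction of view i: the centre of its span."""
    return -viewzone_width / 2 + (i + 0.5) * view_span(n_views, viewzone_width)


def view_index(theta: float, n_views: int, viewzone_width: float) -> Optional[int]:
    """Which view an eye at horizontal angle theta sees, or None outside the viewzone."""
    half = viewzone_width / 2
    if not -half <= theta < half:
        return None
    i = int((theta + half) // view_span(n_views, viewzone_width))
    return min(i, n_views - 1)  # guard against floating-point rounding at the top edge


zone = math.radians(30)  # hypothetical 30-degree viewzone
print([view_index(math.radians(a), 16, zone) for a in (-14, -5, 0, 5, 14)])
```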

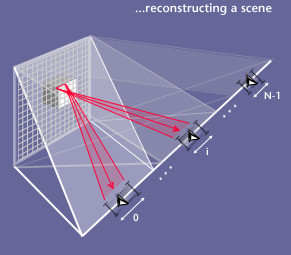

Two things are needed to generate a holographic stereogram in this fashion: a set of N images that describe scene parallax, and a diffraction pattern that relays them in N different directions as described above. First, we compute a set of N diffractive elements called basis fringes. When illuminated, these fringes redirect light into the viewzone, as the figure above shows. The basis fringes are independent of any image information, but when one is combined with an image pixel value, it directs that pixel's information to a designated span of the viewzone.
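
As a rough illustration only, a basis fringe can be approximated as a sum of cosine gratings whose first diffracted orders evenly sample one view's angular span. This is a simplified stand-in, not the group's actual fringe computation. It assumes a plane reference wave at a hypothetical off-axis angle `ref_angle`, uses the grating equation f = (sin θ − sin θ_ref)/λ for each component, and assigns random phases so that energy is spread across the fringe rather than concentrated in one peak. All parameter names and example values are hypothetical.

```python
import math
import random


def basis_fringe(i, n_views, viewzone_width, wavelength, ref_angle,
                 sample_pitch, n_samples, n_components=32, seed=0):
    """Simplified, hypothetical basis fringe for view i: a 1-D sum of cosine
    gratings whose first-order diffracted angles sample view i's span.

    Lengths share one unit (here metres); angles are in radians.
    """
    rng = random.Random(seed)  # fixed seed -> reproducible fringe
    span = viewzone_width / n_views
    start = -viewzone_width / 2 + i * span
    nyquist = 1 / (2 * sample_pitch)
    components = []
    for k in range(n_components):
        theta = start + (k + 0.5) * span / n_components
        # Grating equation: this frequency diffracts the reference wave into theta.
        f = (math.sin(theta) - math.sin(ref_angle)) / wavelength
        if abs(f) >= nyquist:
            raise ValueError("sample_pitch too coarse for this fringe frequency")
        components.append((f, rng.uniform(0, 2 * math.pi)))
    fringe = [
        sum(math.cos(2 * math.pi * f * s * sample_pitch + phase) for f, phase in components)
        for s in range(n_samples)
    ]
    peak = max(abs(v) for v in fringe) or 1.0
    return [v / peak for v in fringe]  # normalised to the range [-1, 1]


# Hypothetical parameters: 16 views over 30 degrees, 633 nm light, -20 degree reference.
fringes = [
    basis_fringe(i, n_views=16, viewzone_width=math.radians(30), wavelength=633e-9,
                 ref_angle=math.radians(-20), sample_pitch=0.25e-6, n_samples=1024)
    for i in range(16)
]
```

With these example numbers the off-axis reference keeps both the undiffracted light (at −20°) and the conjugate diffracted order (roughly −25° to −71°) outside the ±15° viewzone.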

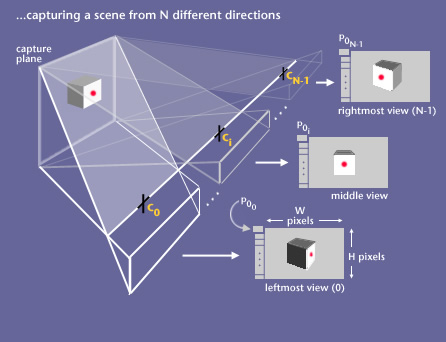

To capture or render scene parallax information, cameras are positioned along a linear track, with each camera's viewing direction perpendicular to the capture plane. N views are generated from locations along the track that correspond to the center output directions of the basis fringes. In this type of HPO computed stereogram, correct capture cameras use a hybrid projection: perspective in the vertical direction and orthographic in the horizontal. Conventional perspective views lead to slight distortion in the reconstructed images.
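
The hybrid projection can be sketched for a single scene point. The sketch assumes a hypothetical convention: the hologram plane is z = 0, z > 0 points toward the viewer, eye height is y = 0, and `viewing_distance` is the intended viewing distance. Horizontally, every ray of view i is parallel to that view's direction `theta_i`, so the image column is x sheared in proportion to depth. Vertically, the point is projected in ordinary perspective from the eye.

```python
import math


def hybrid_project(x, y, z, theta_i, viewing_distance):
    """Hypothetical HPO capture projection of scene point (x, y, z) onto the
    hologram plane z = 0 for the view whose direction is theta_i (radians)."""
    if z >= viewing_distance:
        raise ValueError("point must lie on the hologram side of the viewer")
    # Horizontal: orthographic along theta_i (parallel rays, shear by depth).
    x_h = x - z * math.tan(theta_i)
    # Vertical: perspective from an eye at the intended viewing distance.
    y_h = y * viewing_distance / (viewing_distance - z)
    return x_h, y_h


print(hybrid_project(0.0, 1.0, 10.0, math.radians(5), 100.0))
```

One way to see why a conventional perspective camera introduces distortion: in a perspective view, different pixel columns correspond to different ray directions, yet the stereogram re-projects every pixel of view i along the single direction `theta_i`. The orthographic horizontal term avoids that mismatch.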

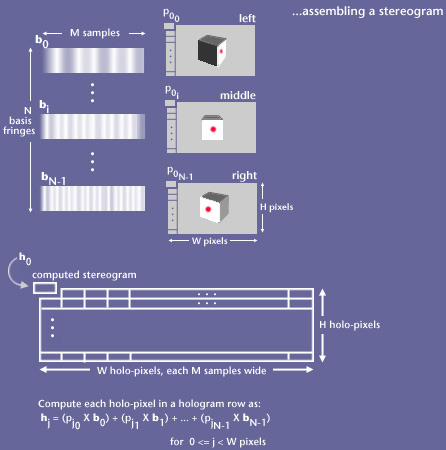

Once N parallax views have been generated, they are combined with the N precomputed basis fringes to assemble a holographic stereogram. (The same basis fringes can be reused for any sweep of parallax views generated with the same capture geometry.) Each holo-pixel is computed as a sum of basis fringes, each scaled by the corresponding image pixel value, as shown above. When properly illuminated, the resulting holo-pixel sends N different image pixels in N different directions.
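
The assembly step is a weighted sum, which the following sketch makes explicit for one horizontal line of the stereogram. The function names and the data layout (`views[i][h]` is pixel h of the matching line in parallax view i) are hypothetical. Holo-pixel h takes pixel h from every view, scales each basis fringe by it, and sums. The fringes are only read, never modified, which is why one set can be reused for any sweep of views captured with the same geometry.

```python
from typing import Sequence


def holo_pixel(pixel_values: Sequence[float],
               basis_fringes: Sequence[Sequence[float]]) -> list[float]:
    """One holo-pixel: the sum over i of pixel_values[i] * basis_fringes[i]."""
    if len(pixel_values) != len(basis_fringes):
        raise ValueError("need exactly one image-pixel value per basis fringe")
    out = [0.0] * len(basis_fringes[0])
    if any(len(fringe) != len(out) for fringe in basis_fringes):
        raise ValueError("all basis fringes must have the same length")
    for value, fringe in zip(pixel_values, basis_fringes):
        for k, sample in enumerate(fringe):
            out[k] += value * sample
    return out


def hololine(views: Sequence[Sequence[float]],
             basis_fringes: Sequence[Sequence[float]]) -> list[float]:
    """One stereogram line: holo-pixels for each image column, laid side by side."""
    line: list[float] = []
    for h in range(len(views[0])):
        line.extend(holo_pixel([view[h] for view in views], basis_fringes))
    return line


# Toy example: two views and trivial two-sample "fringes" that expose the structure.
print(hololine([[2.0, 3.0], [5.0, 7.0]], [[1.0, 0.0], [0.0, 1.0]]))  # → [2.0, 5.0, 3.0, 7.0]
```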

The result is a kind of light-field reconstruction of the captured scene. The pixels of each parallax view are projected in the same direction from which they were captured, and are spread slightly and uniformly to prevent dead space in the viewzone. When a viewer observes the reconstructed image, sampled scene parallax information is relayed to each eye, and this stereo view changes appropriately and smoothly with side-to-side head movement. Since the display has no vertical parallax, distortion-free viewing is available only at the correct viewing distance, as shown to the left.
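
The viewing-distance limitation can be illustrated with a simplified geometric model. It assumes, hypothetically, that horizontal parallax still places a point at its true depth z, while its vertical position was fixed at capture by a perspective projection from the design distance D. An eye at a different distance D′ then sees the point along the line through its fixed height on the hologram plane, so the apparent height is y_h (D′ − z) / D′, which equals the true height only when D′ = D (or when the point lies on the hologram plane). The function name and conventions (eye height y = 0, z > 0 toward the viewer) are illustrative only.

```python
def apparent_height(y: float, z: float, design_distance: float, actual_distance: float) -> float:
    """Simplified model of vertical distortion in an HPO display."""
    if z >= min(design_distance, actual_distance):
        raise ValueError("point must lie on the hologram side of the viewer")
    # Height on the hologram plane, fixed at capture by vertical perspective.
    y_h = y * design_distance / (design_distance - z)
    # Line of sight from the actual eye through (y_h, 0), evaluated at depth z.
    return y_h * (actual_distance - z) / actual_distance


print(apparent_height(10.0, 20.0, 500.0, 500.0))  # correct distance: true height, up to rounding
print(apparent_height(10.0, 20.0, 500.0, 250.0))  # viewer too close: height no longer matches
```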

