Haptics and Spatial Interaction

In our haptics and spatial interaction work, we use the Phantom(TM) force-feedback device, a three-degree-of-freedom mechanical linkage with a three-degree-of-freedom passive gimbal, which supports a simple thimble or stylus used by the hand. The Phantom reports the 3D position of the stylus or thimble tip and displays force to a person's hand. Using this device, a person can feel computationally specified shapes, bulk phenomena, and surface properties within the work volume.
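The position-in, force-out loop described above can be illustrated with a minimal sketch. It assumes a simple penalty (spring) model for a virtual sphere; the function name, units, and stiffness value are hypothetical and are not taken from the Phantom software or from this project.

```python
import math

def sphere_contact_force(tip, center, radius, stiffness):
    """Return the (fx, fy, fz) force for a stylus tip touching a virtual sphere.

    Assumed model (not from the source): a spring pushes the tip back
    out along the surface normal, in proportion to penetration depth.
    """
    dx, dy, dz = (t - c for t, c in zip(tip, center))
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    depth = radius - dist  # positive only when the tip is inside the sphere
    if depth <= 0.0 or dist == 0.0:
        return (0.0, 0.0, 0.0)  # no contact, or normal undefined at the center
    scale = stiffness * depth / dist  # spring magnitude divided by |offset|
    return (dx * scale, dy * scale, dz * scale)
```

In a haptic loop, a function like this would be called once per servo tick with the reported tip position, and the returned vector would be sent to the device as the commanded force.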

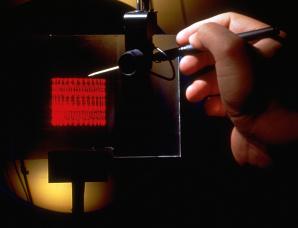

We are combining force feedback with holographic stereograms and holographic video to develop interactive holographic displays that present vivid, coincident visual and haptic cues to a user. The haptic holovideo project allows a person to see, "feel", and interactively modify very simple holographic images, and hints at how spatial displays with haptic feedback might be used in the future as design, training, and visualization tools.

In this system, the force model is both spatially and metrically registered with the free-standing spatial image displayed by holovideo, and a single multimodal image of a cylinder to be interactively "carved" is presented. A user can see the hand-held stylus interacting with the changing holographic image while feeling forces that result from carving. As the user pushes the stylus into the simulated cylinder, its haptic model deforms in a non-volume-conserving way, and a simple surface of revolution can be fashioned. The simulation behaves as a rudimentary lathe or potter's wheel.
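One way to picture the carving step is as an edit to a radius profile, with one radius per height sample along the axis of rotation. The sketch below is an assumption for illustration only: the profile representation, the `carve` function, and the tool-width parameter are hypothetical and are not described in the source.

```python
def carve(profile, heights, tip_height, tip_radial_dist, tool_width):
    """Return a new radius profile after the stylus presses into the surface.

    profile[i] is the radius of the surface of revolution at heights[i].
    Material is simply removed (the radius shrinks) and is not pushed
    elsewhere, so the total volume is not conserved.
    """
    carved = list(profile)
    for i, z in enumerate(heights):
        within_tool = abs(z - tip_height) <= tool_width / 2
        if within_tool and tip_radial_dist < carved[i]:
            # Because the model spins about its axis, pressing in at one
            # angle lowers the radius of the whole ring at this height.
            carved[i] = max(tip_radial_dist, 0.0)
    return carved
```

Storing only a radius per height keeps the shape a surface of revolution by construction, which matches the lathe or potter's wheel behavior.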

The haptic model, initially and in subsequent stages of carving, is represented as a surface of revolution with two caps. It rotates about its vertical axis at one revolution per second. The model straddles a haptic plane which spatially corresponds with the vertical diffuser in the output plane of holovideo. Because it is not yet possible to compute a new 36MB hologram in real time, we use a set of pre-computed holograms to "assemble" the visual representation of the carved surface. As the holo-haptic image is carved, a visual approximation to the resulting surface of revolution is constructed by loading the appropriate lines from the set of pre-computed holographic images.
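The assembly step can be sketched as a per-line lookup. This sketch assumes that each pre-computed hologram depicts a cylinder of one fixed radius and that each hologram line corresponds to one height on the model. Both are assumptions, and every name below is hypothetical.

```python
def nearest_index(radius, precomputed_radii):
    """Index of the pre-computed radius closest to the requested radius."""
    return min(range(len(precomputed_radii)),
               key=lambda k: abs(precomputed_radii[k] - radius))

def assemble_hologram(line_radii, precomputed_radii, precomputed_holograms):
    """Build a display hologram line by line from pre-computed holograms.

    line_radii[i] is the carved radius at the height shown by line i.
    precomputed_holograms[k][i] is line i of the hologram whose cylinder
    has radius precomputed_radii[k].
    """
    return [precomputed_holograms[nearest_index(r, precomputed_radii)][i]
            for i, r in enumerate(line_radii)]
```

The result is only an approximation of the carved surface, since each line is snapped to the nearest radius available in the pre-computed set.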

By making only local line-level changes to the image instead of recomputing the entire hologram at each visual update, we can reflect changes to the underlying model geometry in the holographic display in near real time.
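A line-level update of this kind might look like the following sketch, which rewrites only the lines whose selected pre-computed hologram has changed since the last visual update. The data layout and the function name are hypothetical and serve only to illustrate the idea.

```python
def update_changed_lines(display, old_choice, new_choice, precomputed_holograms):
    """Overwrite only the display lines whose source hologram changed.

    old_choice[i] and new_choice[i] are indices into precomputed_holograms
    for line i, before and after the latest carving step. The display is
    modified in place, and the indices of rewritten lines are returned.
    """
    changed = []
    for i, (old, new) in enumerate(zip(old_choice, new_choice)):
        if old != new:
            display[i] = precomputed_holograms[new][i]  # copy a single line
            changed.append(i)
    return changed
```

Under these assumptions, the cost of a visual update scales with the number of lines touched by the carving step, not with the size of the full hologram.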

data can be dispatched to a 3D printer to produce a physical hardcopy of the design. The output shown here was printed on a Stratasys 3D printer.

Sponsors of this work include the Honda R&D Company, NEC, IBM, the Digital Life Consortium at the MIT Media Lab, the Office of Naval Research (Grant N0014-96-11200), the Interval Research Corporation, and Mitsubishi Electric Research Laboratories.

Ravikanth Pappu and Wendy Plesniak, "Haptic interaction with holographic video images," Proceedings of the IS&T/SPIE's Symposium on Electronic Imaging, Practical Holography XII, January 1998.

Wendy Plesniak and Ravikanth Pappu, "Coincident display using haptics and holographic video," accepted for publication in the Proceedings of ACM SIGCHI Conference on Human Factors in Computing Systems, April 1998.

Wendy Plesniak and Michael Klug, "Tangible holography: adding synthetic touch to 3D display," Proceedings of the IS&T/SPIE's Symposium on Electronic Imaging, S.A. Benton, ed., Practical Holography XI, February 1997.

photos: Webb Chappell and Wendy Plesniak

© MIT Media Laboratory - Spatial Imaging Group