Recent advances in human-computer interface technologies create the potential to open doors for individuals with disabilities. A significant problem in designing assistive aids is the large degree of individual difference among potential users of a technology. Such large variation makes it difficult to design a single interface that works for everyone. The prohibitively high clinical costs of assessing individuals and designing personalized interfaces can be mitigated by creating interfaces that tune themselves to a user's idiosyncratic abilities and preferences, eliminating the need to conform to fixed interface protocols. We are building communication aids that learn to map the user's vocalizations and body movements into machine actions, enabling the user to control speech synthesizers, appliances, and other devices they encounter in their daily activities.

Context-Aware Adaptive Communication Aid

We are currently developing a new communication system that combines a dynamic touch display with situation-grounded lexical prediction.

Learning to map Prosody to Small-talk Phrases

This prototype learns to translate a small set of vocalizations into clear speech. It is meant to be used by individuals with severe speech disorders. Each symbol on the touch screen is hard-wired to a pre-recorded phrase. Initially, the user touches a symbol to activate the associated phrase. The user can also vocalize while touching a symbol. A learning algorithm searches for consistent aspects of the vocalizations that may be used to identify the intended phrase. Over time, the system maps vocalizations onto phrases, eliminating the need for the touch tablet. A speech-only interface is preferred by many individuals who would rather use what little vocal control they have to communicate, since it allows face-to-face contact and is socially more compelling than pointing to a picture board.
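The learning loop described above, in which touch supplies the label and the voice supplies the features, can be illustrated with a minimal sketch. This is not the prototype's actual algorithm, which is not specified here. It assumes each vocalization has already been reduced to a fixed-length feature vector (for example, hypothetical prosodic measurements such as mean pitch and duration) and swaps in a simple nearest-centroid rule. The class name `VocalizationMapper` and the `min_examples` threshold are illustrative assumptions.

```python
import math
from collections import defaultdict


class VocalizationMapper:
    """Toy nearest-centroid learner (an assumption, not the prototype's method).

    A touch on a symbol supplies the phrase label; the simultaneous
    vocalization supplies a feature vector.
    """

    def __init__(self, min_examples=3):
        # Hypothetical threshold: a phrase is only predicted from voice
        # alone once this many labelled vocalizations have been seen.
        self.min_examples = min_examples
        self.sums = {}                  # phrase -> running sum of feature vectors
        self.counts = defaultdict(int)  # phrase -> number of labelled examples

    def observe(self, phrase, features):
        """Record one vocalization made while touching the symbol for `phrase`."""
        if phrase not in self.sums:
            self.sums[phrase] = [0.0] * len(features)
        self.sums[phrase] = [s + f for s, f in zip(self.sums[phrase], features)]
        self.counts[phrase] += 1

    def predict(self, features):
        """Return the phrase with the closest mean vector, or None if undertrained."""
        best_phrase, best_dist = None, math.inf
        for phrase, total in self.sums.items():
            n = self.counts[phrase]
            if n < self.min_examples:
                continue
            centroid = [s / n for s in total]
            dist = math.dist(centroid, features)
            if dist < best_dist:
                best_phrase, best_dist = phrase, dist
        return best_phrase
```

In this sketch, `predict` returns `None` until enough touch-labelled vocalizations have accumulated for at least one phrase, which mirrors the gradual shift from touch input to voice-only input.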

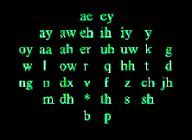

Speech Display

This display was designed for individuals with hearing disabilities. A recurrent neural network continuously estimates phoneme probabilities of input speech in real time, and the brightness of each display element is set in proportion to the probability of its phoneme.

Deb Roy and Alex Pentland (1998). "A Phoneme Probability Display for Individuals with Hearing Disabilities." Assets '98.
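The brightness rule of the Speech Display can be illustrated with a minimal sketch. It assumes the network's output for one time step is available as a dictionary from phoneme label to probability. The function name `brightness_levels`, the `max_level` parameter, and the renormalization step are assumptions for illustration and are not taken from the paper.

```python
def brightness_levels(phoneme_probs, max_level=255):
    """Map each phoneme's probability to a brightness in [0, max_level].

    Assumption: one display element per phoneme. Probabilities are
    renormalized so brightness stays proportional even if the inputs
    do not sum exactly to 1.
    """
    total = sum(phoneme_probs.values())
    if total <= 0:
        # No evidence for any phoneme: leave every element dark.
        return {phoneme: 0 for phoneme in phoneme_probs}
    return {
        phoneme: round(max_level * prob / total)
        for phoneme, prob in phoneme_probs.items()
    }
```

Calling this function once per frame of network output would brighten the elements for likely phonemes and dim the rest.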

This material is based upon work supported by the National Science Foundation under Grant No. 0083032. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.
