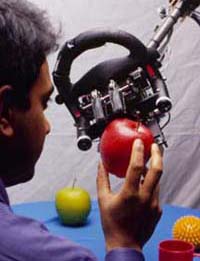

Trisk

Trisk is our newest robot, still under construction, designed for advanced active touch and active vision. Trisk consists of three 6-DOF arms actuated by harmonic drives. Two arms terminate in three-fingered hands (4 DOFs per hand; we are using Barrett hands). Each finger terminates in a 6-DOF force-torque sensor. The third arm (Trisk's "neck") terminates in an active vision head with two cameras, each mounted on a 2-DOF motorized base. Every arm joint has both position and torque sensors, enabling compliant, force-based control, just like Ripley. In total the robot has 30 DOFs. With Trisk, we will explore the philosophy of "vision as touch at a distance" and its implications for language grounding and active learning.
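The 30-DOF total can be checked against the component counts given above. The sketch below is only arithmetic over those stated figures; the grouping into named components is ours. The total works out when the 6-DOF fingertip sensors are treated as sensing axes rather than actuated DOFs.

```python
# Tally Trisk's actuated degrees of freedom from the counts stated in the text.
ARMS, DOF_PER_ARM = 3, 6               # two hand-bearing arms plus the "neck"
HANDS, DOF_PER_HAND = 2, 4             # three-fingered Barrett hands
CAMERAS, DOF_PER_CAMERA_BASE = 2, 2    # motorized camera bases on the head

components = {
    "arms": ARMS * DOF_PER_ARM,
    "hands": HANDS * DOF_PER_HAND,
    "camera bases": CAMERAS * DOF_PER_CAMERA_BASE,
}
# Fingertip force-torque sensors measure 6 axes but add no actuated DOFs.
total_dof = sum(components.values())

for name, dof in components.items():
    print(f"{name}: {dof}")
print(f"total: {total_dof}")
```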

Ripley

Ripley is a custom-built, 7-DOF robot terminating in a "head" that consists of a 1-DOF gripper and a pair of video cameras.

Hermes

Hermes is a mobile robot with twin motor drives and a 2-DOF gripper. Its navigation sensors include LIDAR and sonar range finders, color video, and bump sensors. Onboard computers handle low-level sensory-motor processing, and a wireless network link connects the robot to back-end controllers. With Hermes, we are exploring how space, paths, and movement can be represented in terms of sensory-grounded affordances.

Elvis

Elvis is an 8-DOF robot chandelier. It has four "tentacles of light": halogen lamps, each actuated by a 2-DOF swivel-and-rotate servo, with dimmable control over each lamp. The central dome houses a fifth lamp and a fish-eye camera that lets Elvis sense his environment. Elvis demonstrates how interactive robot technology can be introduced into the home.

Toco

Toco is an 8-DOF robot that supported experiments in active vision, language acquisition, and situated human-robot interaction. A miniature camera is mounted in one of Toco's eyes (the same kind of camera used in Ripley and Trisk). Toco's mouth, eyes, and other facial features are actuated by micro servos and were used to give visual feedback on the state of Toco's internal control system.

The Restaurant Game

The Restaurant Game is a research project that will algorithmically combine the gameplay experiences of thousands of players to create a new game. We will apply machine learning algorithms to data collected through the multiplayer Restaurant Game and produce a new single-player game, which we will enter in the 2008 Independent Games Festival. Everyone who plays The Restaurant Game will be credited as a Game Designer.

Collaboration & Planning Sim

We are using the Torque game engine (http://www.garagegames.com) to explore collaboration between humans and synthetic characters. In a cooperative puzzle-solving scenario, we employed a plan recognizer based on a context-free grammar to let a synthetic character understand ambiguous spoken language (such as "Can you help me with this?"). We have also developed STRIPS and HTN planners for unified planning of navigation, action, and communication. Characters use these planners both to control their own autonomous behavior and to infer a human player's goals by planning from the human's perspective. Characters that understand the human's goals and plans can move beyond a master-slave relationship and become true collaborative partners in work or play. In the future, we will use this platform as a large-scale data collection system for natural language in real-world situations. Natural language provides a much richer interface for communicating with synthetic characters than a mouse or gamepad, and it empowers players who already use text and voice to communicate with other human players in multiplayer environments.
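To illustrate the STRIPS side of this planning only (the HTN planner and grammar-based plan recognizer are not shown), here is a minimal sketch of a STRIPS-style forward-search planner. It is not the project's implementation: the breadth-first search strategy, the `Action`/`plan` names, and the puzzle facts (`at_lever`, `door_open`, `partner_informed`, ...) are hypothetical. It does show how navigation, physical action, and a communicative act can live in one operator set.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A STRIPS operator: applicable when all preconditions hold in the state."""
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset


def A(name, pre, add, delete=()):
    # Convenience constructor for the hypothetical example domain.
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))


def plan(initial, goal, actions):
    """Breadth-first forward search; returns a shortest list of action names, or None."""
    start = frozenset(initial)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:  # every goal fact holds
            return steps
        for act in actions:
            if act.pre <= state:
                nxt = (state - act.delete) | act.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [act.name]))
    return None


# Hypothetical puzzle: navigation, a physical action, and a spoken act share one planner.
ACTIONS = [
    A("walk_to_lever", {"at_start"}, {"at_lever"}, {"at_start"}),
    A("pull_lever", {"at_lever"}, {"door_open"}),
    A("walk_to_door", {"at_lever", "door_open"}, {"at_door"}, {"at_lever"}),
    A("say_door_is_open", {"door_open"}, {"partner_informed"}),
]

steps = plan({"at_start"}, frozenset({"at_door", "partner_informed"}), ACTIONS)
print(steps)
```

Under the same assumptions, goal inference from the human's perspective could reuse `plan()` with the state the character believes the human is in and a set of candidate goals, preferring goals whose plans agree with the human's observed actions.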

Navigation Sim

Our navigation simulator allows us to explore systems that understand spatial language without the overhead of a physical robot. It is based on the open-source racing game TuxKart. The simulated vehicle outputs sensor data from LIDAR distance sensors and a compass, and it is controlled by setting its velocity and turn rate. We plan to add bump sensors, motor stall detectors, and sonar. The simulator can also record the vehicle's motion throughout a session and play the recording back later. This lets us use the simulator in user studies, recording user interactions with the system for later analysis.
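The control interface described above (set velocity and turn rate, record motion, replay it) can be sketched with a simple kinematic model. This is a hypothetical stand-in, not TuxKart's physics: it assumes an explicit-Euler unicycle model, and the `SimVehicle`, `Pose`, and `playback` names are illustrative. LIDAR output is omitted; only a heading readout stands in for the compass.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Pose:
    x: float
    y: float
    heading: float  # radians, 0 along +x, counterclockwise positive


@dataclass
class SimVehicle:
    """Hypothetical vehicle driven by velocity and turn-rate commands."""
    pose: Pose = field(default_factory=lambda: Pose(0.0, 0.0, 0.0))
    t: float = 0.0
    recording: list = field(default_factory=list)  # (time, Pose) samples

    def step(self, velocity: float, turn_rate: float, dt: float) -> None:
        # Explicit-Euler unicycle update: move along the current heading,
        # then rotate by turn_rate * dt.
        p = self.pose
        self.pose = Pose(
            p.x + velocity * math.cos(p.heading) * dt,
            p.y + velocity * math.sin(p.heading) * dt,
            p.heading + turn_rate * dt,
        )
        self.t += dt
        self.recording.append((self.t, self.pose))

    def heading_deg(self) -> float:
        # Heading in degrees, wrapped to [0, 360).
        return math.degrees(self.pose.heading) % 360.0


def playback(recording):
    """Replay a recorded session; samples were appended in time order."""
    for t, pose in recording:
        yield t, pose


car = SimVehicle()
for _ in range(10):
    car.step(velocity=1.0, turn_rate=0.0, dt=0.1)    # drive straight
car.step(velocity=0.0, turn_rate=math.pi / 2, dt=1.0)  # turn in place

for t, pose in playback(car.recording):
    print(f"t={t:.1f}  x={pose.x:.2f}  y={pose.y:.2f}  heading={math.degrees(pose.heading):.0f}")
```

Recording poses rather than raw commands keeps playback independent of the motion model, which suits after-the-fact analysis of user sessions.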

Social Sim

Present-day multi-user graphical role-playing games provide a rich interaction environment that includes rooms and exterior areas, everyday objects such as chairs, doors, and chests, possessions, character traits, and other players' avatars. A player can act on any of these, either by taking direct action in the world or by speaking with other players. We are using a commercial game, Neverwinter Nights (http://nwn.bioware.com), which ships with an editor for creating custom game worlds and has a large, active online player base. We have instrumented the game so that we can collect not only the text users type but also their movements and actions, such as item pick-ups and drop-offs, doors opened, and levers pulled. The game world can also be scanned for object and room locations. The data therefore consist of complete records of the game situation, physical changes to the situation, player actions, and player text messages. In addition to online collection, which captures only typed text, we also collect data in the lab, recording players' time-synchronized speech in place of text messages. We are now designing maps for this game that elicit the kinds of physical, social, and planning interactions that let us ground natural language in a rich situational model.
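The collected data interleave several streams (player actions, world changes, typed text, or speech in the lab). Assuming each stream is timestamped on a shared session clock and already sorted, a minimal sketch of combining them into one timeline follows. The `LogEvent` fields and event kinds are hypothetical and do not reflect the actual log format or the game's scripting interface.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEvent:
    time: float   # seconds since session start (shared clock assumed)
    player: str
    kind: str     # hypothetical kinds: "door_open", "pickup", "chat", "speech", ...
    detail: str


def merge_streams(*streams):
    """Merge per-source event lists, each sorted by time, into one time-ordered list."""
    return list(heapq.merge(*streams, key=lambda e: e.time))


actions = [
    LogEvent(1.0, "p1", "door_open", "north door"),
    LogEvent(4.5, "p2", "pickup", "key"),
]
chat = [
    LogEvent(2.0, "p2", "chat", "did you get the door?"),
    LogEvent(5.0, "p1", "chat", "yes, grab the key"),
]

timeline = merge_streams(actions, chat)
for e in timeline:
    print(f"{e.time:4.1f} {e.player} {e.kind}: {e.detail}")
```

`heapq.merge` streams its inputs lazily, so the same approach would scale to long sessions if the `list()` call were dropped.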
